From Observability to Organizational Resilience

By FormWave Collective — Christa Bianchi, Derrick Cash, and Ray Palmer Foote

This article represents the shared thinking and lived experiences of FormWave Collective—a collaboration between professionals across branding, innovation, anthropology, and digital strategy—committed to surfacing signal, shaping what moves us, and reframing the future of work.

Summary:

Resilience comes from culture, not dashboards. Most failures start with ignored trust signals, not technical faults. Real observability includes systems, leadership, and culture. Track soft signals like values drift and psychological safety, not just uptime. Organizations become resilient when they learn quickly, act on signals, and make transparency safe.

If the last few years have taught us anything, it’s that systems don’t fail; cultures do. The outages, ethical crises, and AI missteps that make headlines rarely start with a technical fault. They begin with a quiet erosion of trust, missed signals, and decisions made in haste because someone assumed the dashboard told the full story.

Most organizations mistake visibility for resilience. We watch graphs, monitor uptime, and can tell when a system slows down or goes offline entirely. However, these efforts largely fail to capture behaviors, trust, and the moments when our culture is silently grinding to a halt.

Dashboards can alert you to an outage. They won’t warn you that your workforce no longer believes in your strategy. And when resilience is on the line, it’s culture, not code, that breaks first.

If you want real resilience, remember this:

Leadership creates the conditions; culture makes them real. Without both, observability is just more dashboards.

Redefining Resilience

I define resilience not as recovery from failure, but as the ongoing capacity to adapt while staying true to values. It’s less about bouncing back and more about learning forward. True resilience means that even when technology fails, your people, processes, and principles hold.

And yet, many organizations still equate monitoring with mastery. We’re good at observing systems, but not ourselves. We track uptime, latency, and accuracy, but rarely track trust alignment, ethical drift, or adoption confidence.

Observability without humanity is blindness.

It’s time to evolve our notion of observability from metrics to meaning, from what we can see to what we can sense.

The Observability Triangle for Resilience

Think of resilience as a triangle that connects three domains:

- Systems (telemetry & KPIs): what you can see.

- Leadership (strategy & incentives): what you choose to look for and act on.

- Culture (norms & behaviors): how people actually behave when they see it.

Failures usually occur at the interfaces:

Great telemetry + weak leadership → signals ignored.

Imagine a ship outfitted with state-of-the-art radar: every storm tracked, every iceberg detected. But the captain hesitates, waiting for clearer confirmation before steering. The crew sees the warnings but follows orders to stay the course. The ship doesn’t sink because of bad technology; it sinks because no one decided to act.

Organizations often have excellent data, dashboards, and analytics pipelines, but leadership delays or avoids action when signals challenge the narrative of success. Insight without courage becomes noise.

Clear strategy + brittle culture → signals suppressed or gamed.

The conductor has written a perfect composition, every note, every tempo marked with precision. But the musicians have learned that mistakes are punished, not corrected. So, they play softly, fake confidence, and skip the hard parts when the conductor isn’t listening. The score is beautiful, but what the audience hears is lifeless.

When a culture fears scrutiny, data is sanitized, metrics are massaged, and leaders only hear harmony when they should be hearing tension. Strategy without psychological safety breeds quiet dysfunction.

Healthy culture + vague metrics → intuition without evidence.

Picture a group of hikers. Everyone trusts one another. The mood is upbeat. They help each other over rocks, share food, and move as one. But without a compass (or a map) they wander in circles, mistaking collaboration for progress. Good intentions can’t substitute for direction.

Even a healthy culture can’t thrive without clarity. When metrics are vague or missing, even the most cohesive teams rely on gut feel. Alignment without measurement drifts into complacency.

Most dashboards live in the first corner (Systems), and that’s where observability has stopped evolving. But resilience depends on how well leadership and culture connect to those systems.

Leadership’s Role

Leaders decide what gets instrumented, why it matters, who owns it, and what behaviors are rewarded when signals show friction. When incentives fixate on uptime or velocity alone, soft signals (like trust erosion, values drift, and resistance to change) remain invisible.

Leaders also set the overstory – the narrative that tells people how to interpret signals and act. If the story is “move fast,” teams cut corners. If the story is “learn fast,” they build guardrails and share lessons.

Good observability begins with leadership choosing what’s worth noticing.

Culture’s Role

Culture determines how people respond to signals: escalate or hide, learn or blame, adapt or resist. You can have perfect dashboards and still fail if your culture punishes candor, shortcuts review, or treats guardrails as red tape.

A resilient culture values transparency over optics and learning over blame. It listens to friction instead of silencing it. It celebrates the moment someone raises a red flag, not just the moment the issue is resolved.

Culture, more than code, determines whether observability becomes foresight or surveillance.

Quick Diagnostic

Ask yourself:

- Leadership: Are soft-signal KPIs (trust, contestation, values drift) on your executive dashboard, with owners and thresholds?

- Culture: Do people feel safe flagging misalignment? Do near-misses become shared lessons within days rather than months?

- Systems: Do your technical and human metrics live in the same review cadence?

If the answer to any of these is “no,” your observability strategy is really just surveillance: watching instead of learning.

If you want observability to make you resilient, not just compliant, this framework is for you.
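One way to make the first diagnostic question tangible is to write each soft-signal KPI down with an explicit owner and threshold. The following is a minimal Python sketch of that idea, not a prescribed implementation: the KPI names, owners, scales, and threshold values are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SoftSignalKpi:
    """A soft-signal KPI with a named owner and an alert threshold."""
    name: str
    owner: str              # role accountable for acting on the signal
    threshold: float        # hypothetical alert level on the KPI's own scale
    higher_is_worse: bool   # direction in which the signal degrades


def breaches(kpi: SoftSignalKpi, value: float) -> bool:
    """Return True when the observed value crosses the KPI's threshold."""
    if kpi.higher_is_worse:
        return value > kpi.threshold
    return value < kpi.threshold


def review(kpis: list[SoftSignalKpi], readings: dict[str, float]) -> list[str]:
    """Return one finding per KPI that is breached or has no reading at all."""
    findings = []
    for kpi in kpis:
        if kpi.name not in readings:
            findings.append(f"{kpi.name}: no reading, ask {kpi.owner}")
        elif breaches(kpi, readings[kpi.name]):
            value = readings[kpi.name]
            findings.append(
                f"{kpi.name}: {value} breaches {kpi.threshold}, escalate to {kpi.owner}"
            )
    return findings


# Hypothetical registry: names, owners, and thresholds are illustrative only.
REGISTRY = [
    SoftSignalKpi("trust_alignment", "Chief People Officer", 0.70, higher_is_worse=False),
    SoftSignalKpi("values_drift", "Head of Ethics", 5.0, higher_is_worse=True),
    SoftSignalKpi("shadow_ai_index", "CIO", 0.25, higher_is_worse=True),
]

if __name__ == "__main__":
    for finding in review(REGISTRY, {"trust_alignment": 0.62, "values_drift": 3.0}):
        print(finding)
```

Treating a missing reading as a finding is a deliberate choice in this sketch: a soft signal that nobody measures is itself worth surfacing in the review.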

At the end of the day, observability is about what you learn and how quickly you act when you see the truth. Start small but signal big:

- Put soft-signal KPIs beside uptime and time to resolution. Let “trust alignment” trend next to “system reliability.”

- Align incentives to learning. Track “incident learning half-life” and the quality of postmortems as performance indicators.

- Refresh your overstory. Frame guardrails as instruments of purpose, not policing. Resilience is a story people tell themselves about why it’s safe to speak up and smart to adapt.

Reframing Observability

In DevOps, observability helps us infer internal state from external outputs – logs, metrics, traces. But this only tells us how systems behave, not why they behave that way, nor how people are responding to them.

In AI-driven enterprises, technical observability without human observability creates a false sense of control. We know when a pipeline breaks but not when morale cracks. We monitor model drift but ignore value drift.

That’s why I believe in a multi-layer observability stack:

- Technical: Performance, accuracy, latency, model drift.

- Human: Trust, adoption, feedback loops, psychological safety.

- Ethical/Governance: Fairness, explainability, accountability, and adherence to Responsible AI guardrails.

Each layer provides unique signals, and resilience only emerges when these layers communicate.

As someone who has led AI modernization projects across healthcare and government, I’ve seen the difference firsthand: the most stable systems are the ones where the humans trust the data and the data reflects the humans behind it. This is consistent with the thinking in the MIT Sloan / MIT CSAIL course “AI: Implications for Business Strategy.”

The Hidden Signals

Every crisis begins with ignored whispers.

McKinsey found that 70% of large-scale transformations fail due to culture, not technology. Edelman’s 2024 Trust Barometer shows that 59% of employees distrust AI-driven decisions, even in organizations with strong ethical policies.

Those are the hidden signals, the weak but vital indicators that resilience is decaying.

- Values Drift: Teams prioritize speed or efficiency over fairness, skipping human review “just this once.” Over time, small exceptions become standard practice.

- Trust Erosion: CSAIL research on explanation alignment shows that humans distrust AI when model explanations don’t match intuitive reasoning, even when accuracy remains high. Misalignment quietly kills adoption.

- Resistance to AI: This one is often misunderstood. Shadow AI isn’t rebellion – it’s feedback.

When employees use unsanctioned tools or personal systems to get their work done, they’re not rejecting governance; they’re signaling that the organization hasn’t kept up with how work really happens.

It’s not unlike government officials who have resorted to using personal email accounts or nonstandard collaboration tools to navigate institutional rigidity — technically within the rules, but culturally outside them. That behavior exposes something deeper: a gap between policy and practicality. It reflects the human drive to share, collaborate, and adapt faster than systems allow.

Sometimes these acts are reckless. Other times they’re resourceful. Either way, they’re data. They tell us where innovation is trying to surface, where trust is stretched, and where leadership hasn’t yet created safe lanes for experimentation.

In large enterprises, this is known as the Mini-CIO Syndrome (shadow IT): pockets of unofficial innovation where individuals create their own tech stacks, workflows, or automations because central IT is too slow to respond. It’s both a risk and an opportunity: unmanaged, it fragments the enterprise; guided, it reveals the next generation of resilient behaviors.

Culture, after all, is the measure of difference between people and how we collaborate. The healthier the culture, the more gracefully it absorbs difference and turns friction into foresight.

Building a Soft-Signal KPI Framework

When we introduced the concept of soft-signal KPIs, our intent was to give leaders language and data for what they already feel.

Imagine a “Signals Radar” beside your dashboards:

| KPI | What It Measures | Why It Matters |
| --- | --- | --- |
| Explanation Alignment Score | Agreement between human and model reasoning | Predicts trust and transparency risk |
| Contestation Rate | Frequency of AI overrides or disagreements | Reflects engagement and oversight health |
| Shadow AI Index | Unofficial AI tool usage | Reveals unmet needs and cultural friction |
| Values Drift Delta | Policy exceptions vs. principles | Tracks ethical erosion early |
| Learning Velocity | Time from incident to lesson adoption | Measures cultural agility |

Together, these metrics form a Signals Radar: a dashboard for resilience itself, based on digital trust frameworks. Instead of waiting for crises, leaders can track early warning signs in trust, culture, and ethics.

This is observability for the human side of the enterprise, where foresight becomes measurable.
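To illustrate how two rows of the radar could be turned into numbers, here is a minimal Python sketch of Contestation Rate and Shadow AI Index. The table above does not prescribe formulas, so treating both as simple ratios is an assumption, as are the `Decision` record shape and the tool names in the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """One AI-assisted decision and whether a human overrode it (hypothetical shape)."""
    decision_id: str
    overridden: bool


def contestation_rate(decisions: list[Decision]) -> float:
    """Share of AI-assisted decisions that a human overrode or disputed."""
    if not decisions:
        return 0.0
    return sum(d.overridden for d in decisions) / len(decisions)


def shadow_ai_index(tools_in_use: set[str], sanctioned: set[str]) -> float:
    """Share of AI tools in use that are not on the sanctioned list."""
    if not tools_in_use:
        return 0.0
    return len(tools_in_use - sanctioned) / len(tools_in_use)


if __name__ == "__main__":
    # Illustrative data only: four decisions, one of them overridden.
    decisions = [
        Decision("d1", False),
        Decision("d2", True),
        Decision("d3", False),
        Decision("d4", False),
    ]
    print(contestation_rate(decisions))

    # Illustrative tool names; two of the four tools in use are unsanctioned.
    in_use = {"tool_a", "tool_b", "tool_c", "tool_d"}
    approved = {"tool_a", "tool_b", "tool_e"}
    print(shadow_ai_index(in_use, approved))
```

Neither number is good or bad on its own. A contestation rate near zero can mean people trust the model or that they have stopped checking it, which is why these values are best read as trends alongside the other signals.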

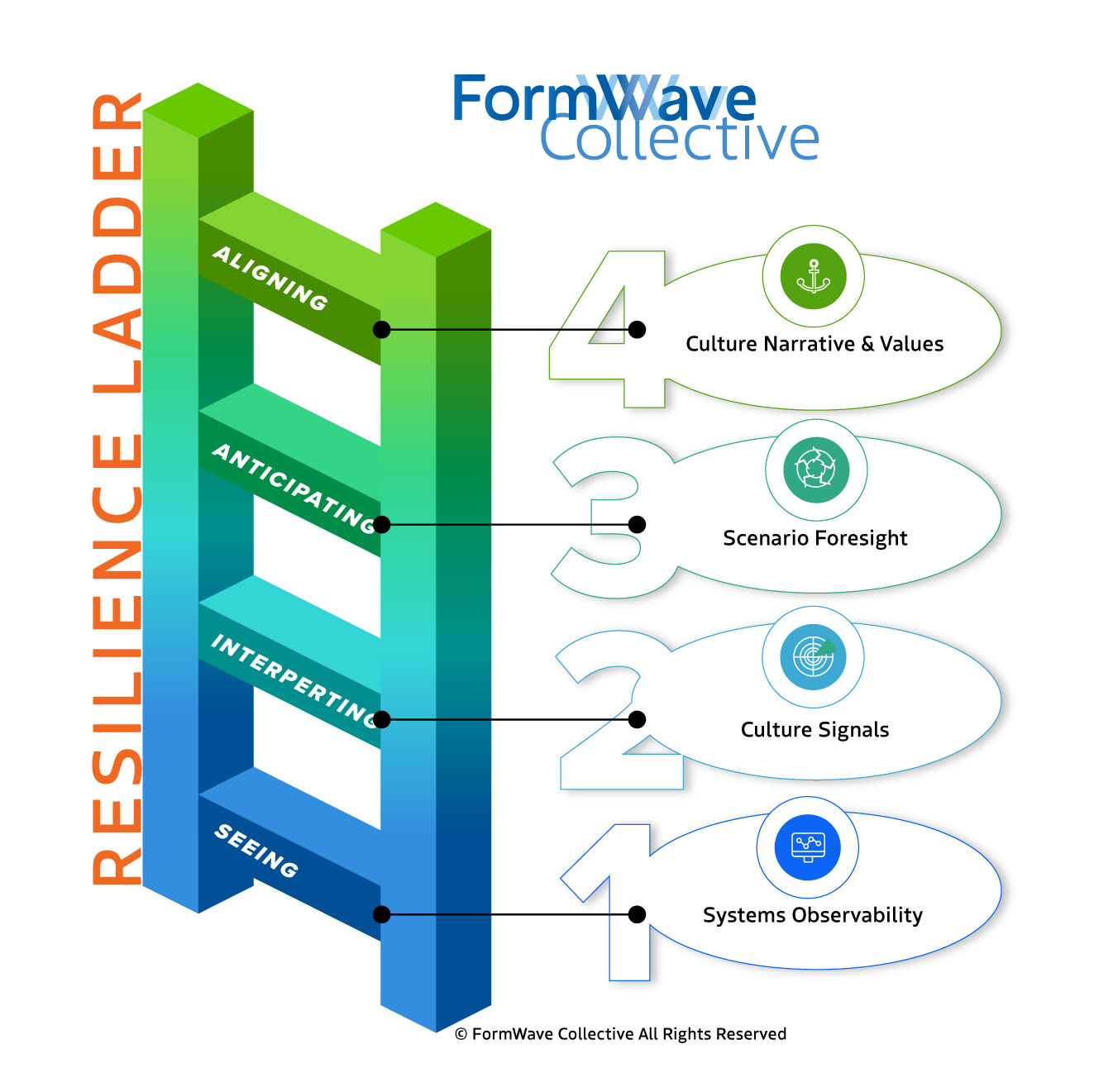

The Observability → Resilience Ladder

As I remind my team, “Dashboards are what you look at; foresight is what you look through.”

To move from observability to foresight, organizations climb what I call the Resilience Ladder:

- Step 1: Dashboards = Seeing (Visibility into systems)

- Step 2: Signals Radar = Interpreting (Visibility into people and culture)

- Step 3: Foresight Loops = Anticipating (Scenario planning and simulation)

- Step 4: Cultural Anchoring = Aligning (Embedding resilience in narrative and values)

Most organizations stall at Step 1. The leap to Steps 3 and 4 – anticipation and alignment – requires something dashboards alone can’t offer: a new overstory.

Practical Actions for AI Foresight

From metrics to meaning, turning observability into transformation.

Culture doesn’t change through instrumentation alone. It changes through story, the shared overstory that shapes how people interpret what they see and decide how to act.

You can implement the processes in this article, but without a narrative that reminds people why resilience matters, they’ll feel mechanical. The power of foresight lies in combining data with meaning – metrics that move hearts as well as dashboards.

Here’s how to start building that bridge between process and purpose:

1. Establish Guardrails and Narratives Together

The first step is defining what responsible freedom looks like.

MIT CSAIL’s Responsible AI Guardrails framework outlines six pillars: Fairness, Reliability, Privacy, Transparency, Sustainability, and Accountability. These aren’t just compliance checkboxes; they’re the moral architecture of digital resilience.

Leaders should pair each guardrail with a story – a human reason that makes it matter. For example:

- Fairness isn’t about statistical parity; it’s about dignity.

- Reliability isn’t uptime; it’s trustworthiness.

- Transparency isn’t disclosure; it’s confidence through clarity.

When guardrails are introduced as shared principles instead of constraints, they invite participation rather than resistance. They become part of the organization’s moral overstory: the narrative that gives purpose to precision.

2. Red-Team Your Culture

The concept of “red teaming” comes from military strategy and cybersecurity, where teams simulate attacks to expose weaknesses. In recent years, it’s been adopted by AI safety research and organizational psychology alike, because no culture is self-aware without challenge.

A Cultural Red Team works the same way: it stress-tests values under pressure.

- Invite employees from diverse departments, roles, and risk tolerances.

- Pose uncomfortable scenarios: a data bias scandal, a tough ethical trade-off, or a failed AI launch.

- Observe how teams respond. Who speaks up? Who stays silent? Which principles hold? Which fracture?

The goal is to make awareness a habit. Just as cybersecurity red teams strengthen defenses, cultural red teams strengthen conscience. They reveal blind spots before the world does.

3. Run Foresight Sprints, Not Just Retros

In agile development, retrospectives look backward. Foresight sprints look forward. They draw on disciplines like strategic foresight and scenario planning, approaches refined by the Institute for the Future (IFTF) and futurists like Peter Schwartz.

A foresight sprint combines data, imagination, and decision-making:

- Use your Signals Radar to identify emerging weak signals in culture, trust, or ethics.

- Ask: If this trend continues, what could it mean for us six months from now?

- Build two or three mini-scenarios (best case, worst case, next case) and decide how you’d respond.

These sprints transform metrics into muscle memory. They teach teams to think in time, not just in tasks. The process turns observability into foresight, and foresight into leadership capacity.

4. Close the Learning Loop

Every organization claims to “learn from mistakes,” but few measure how long that learning takes to spread. That’s why we track incident learning half-life – the time between identifying a lesson and institutionalizing it.

In resilient organizations, lessons travel faster than mistakes. To make that happen:

- Treat every postmortem as a design opportunity, not a debrief.

- Assign explicit owners for “lesson dissemination.”

- Audit what’s actually changed after each incident, not just what was discussed.

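This approach echoes the iterative spirit of the NIST AI Risk Management Framework, whose Govern, Map, Measure, and Manage functions are meant to be revisited continuously rather than completed once. The faster your learning loop closes, the stronger your organizational immune system becomes.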
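As a rough illustration of how incident learning half-life might be measured, the Python sketch below reads "half-life" as the number of days it took for half of the identified lessons to be institutionalized. That reading is an assumption made for the example, as are the record shape (a pair of dates per lesson) and the sample dates.

```python
import math
from datetime import date
from typing import Optional


def learning_half_life(lessons: list[tuple[date, Optional[date]]]) -> Optional[int]:
    """Days until half of the identified lessons were adopted.

    Each lesson is (identified_on, adopted_on); adopted_on is None while the
    lesson is still open. Returns None when there are no lessons or when fewer
    than half of them have been adopted so far.
    """
    if not lessons:
        return None
    needed = math.ceil(len(lessons) / 2)
    durations = sorted(
        (adopted - identified).days
        for identified, adopted in lessons
        if adopted is not None
    )
    if len(durations) < needed:
        return None
    # The needed-th fastest adoption marks the point where half are done.
    return durations[needed - 1]


if __name__ == "__main__":
    # Illustrative data only: three adopted lessons and one still open.
    sample = [
        (date(2025, 1, 6), date(2025, 1, 9)),    # adopted after 3 days
        (date(2025, 1, 6), date(2025, 1, 16)),   # adopted after 10 days
        (date(2025, 2, 1), date(2025, 3, 13)),   # adopted after 40 days
        (date(2025, 2, 10), None),               # not yet adopted
    ]
    print(learning_half_life(sample))
```

Open lessons count toward the total on purpose. If most lessons never get adopted, the function returns `None` instead of a flattering number computed only from the ones that did.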

5. Use Tools as Mirrors, Not Monitors

Finally, remember that observability tools are mirrors for reflection, not monitors for control.

Our AI Readiness Lens is one example of a framework built for introspection, not inspection. It helps organizations identify cultural blind spots and resilience gaps, without turning human behavior into surveillance.

When tools are used as mirrors, they invite curiosity. When they’re used as monitors, they invite compliance. The difference is whether people feel seen or watched.

Leaders who understand this distinction build trust instead of tension.

The Throughline to Resilience: Turning Process Into Purpose

Every one of these practices (guardrails, red teams, foresight sprints, learning loops, diagnostic tools) serves a single aim: to align what we measure with what we mean.

Resilience isn’t achieved through process alone; it’s sustained by story. It’s what happens when the data we collect reinforces the values we profess.

Every organization is resilient, until it’s not.

Resilience isn’t a trait you acquire; it’s a discipline you maintain. It’s the daily practice of filtering signal from noise.

And here’s the truth: noise just happens. Distractions, competing priorities, performative busyness: these are constants of modern work. Not all noise is bad; some of it sparks innovation. But when we lose the discipline to focus on the signal, the truths that matter most, we drift into rigidity. And rigidity is the opposite of resilience.

In our earlier piece on Signal to Noise, we explored this idea: that resilience grows from the ability to discern, not to eliminate. The same is true here.

The signals are already there (trust metrics, cultural friction, ethical tension). You just have to decide whether you’ll listen or get lost in the noise.

So, start today. Ask your team: What signals are we missing right now?

The difference between a resilient organization and a fragile one isn’t who sees more; it’s who learns faster and who acts when they finally see the truth. Dashboards won’t save you. Culture will.

References

- McKinsey & Company. “Why Do Most Transformations Fail? A Conversation with Harry Robinson.” https://www.mckinsey.com/capabilities/people-and-organizational-performance/our-insights/why-do-most-transformations-fail-a-conversation-with-harry-robinson

- Edelman. Edelman Trust Barometer 2024. https://www.edelman.com/trust/2024-trust-barometer

- MIT CSAIL. Explanation Alignment Research. https://csail.mit.edu/news

- CIO.com. “Is Shadow IT Something CIOs Should Worry About?” https://www.cio.com/article/230131/is-shadow-it-something-cios-should-worry-about.html

- MIT CSAIL. Responsible AI Guardrails Framework. https://cap.csail.mit.edu/alliance/responsible-ai

- MIT Sloan School of Management / MIT CSAIL. AI: Implications for Business Strategy. https://executive.mit.edu/course/ai-implications-for-business-strategy

- Gartner Research. Digital Trust Frameworks and Insights. https://www.gartner.com/en/information-technology/insights/digital-trust

- U.S. Army. “Red Teaming Helps Leaders See the Big Picture.” https://www.army.mil/article/150703/red_teaming_helps_leaders_see_the_big_picture

- Institute for the Future (IFTF). Strategic Foresight and Scenario Planning Resources. https://www.iftf.org

- Schwartz, Peter. The Art of the Long View: Planning for the Future in an Uncertain World. https://www.goodreads.com/book/show/49386.The_Art_of_the_Long_View

- National Institute of Standards and Technology (NIST). AI Risk Management Framework (AI RMF 1.0). https://www.nist.gov/itl/ai-risk-management-framework

- RADcube × FWC. “Surfacing Signal: Finding Meaning in the Age of Noise” (Internal FWC Publication). https://formwavecollective.com/surfacing-signal-finding-meaning-in-the-age-of-noise-ai/
